Okay, let’s make this sound a little geeky: https://soundcloud.com/larisa-nikolskya/tchaikovsky

When I was a student, I saw deep learning models as independent entities: all I had to do was build consistent, healthy models capable of giving reasonable predictions. But that was an idealized picture. AI is far more powerful when it is implemented within a solid context.

In this blog post, I will share some takeaways that I found interesting from the AI & Big Data Expo Europe 2019.

I – The massive data illusion:

It is commonly assumed that the more data you have, the better you can train your model and, of course, the better use you can make of AI.

Emilio Billi, CTO of A3Cube Inc., gave a presentation titled “Why are algorithms that were basically invented in the 1950s through the 1980s only now causing such a transformation of business and society?”, in which he explained that computational power is as important as an efficient algorithm. In one of the slides, he mentioned quantum computing, which looks promising when combined with AI.

“To be able to unleash all the potential that AI can bring to us, we need to implement new architectures where computational power and data access are maximized.”

Emilio Billi

If you’re interested in learning more about quantum computing, I recommend this TED talk by Shohini Ghose: https://www.youtube.com/watch?v=QuR969uMICM

II – Always start with a PoC:

In most AI projects, the data engineers start with is not clean enough and requires a lot of preparation and pre-processing, which in turn depends on how the problem the model is meant to solve will be approached.

In their slides, Wonderware presented some of the technical and business challenges they face and explained the crucial first step of any AI project, which they summed up in two phases: Exploration and Basecamp.

The main stages of a PoC life cycle can be summarized as follows:

Exploration: PoC with a limited scope

- Kick-off: Introduction, use case and available data

- Execution: Data model, dashboards, workshops and remote sessions

- Feedback: For adjustments

- Delivery: End result, insights and next steps

Basecamp: Extended proof of concept

This is the phase where the analytical platform is effectively launched.

III – Productionisation:

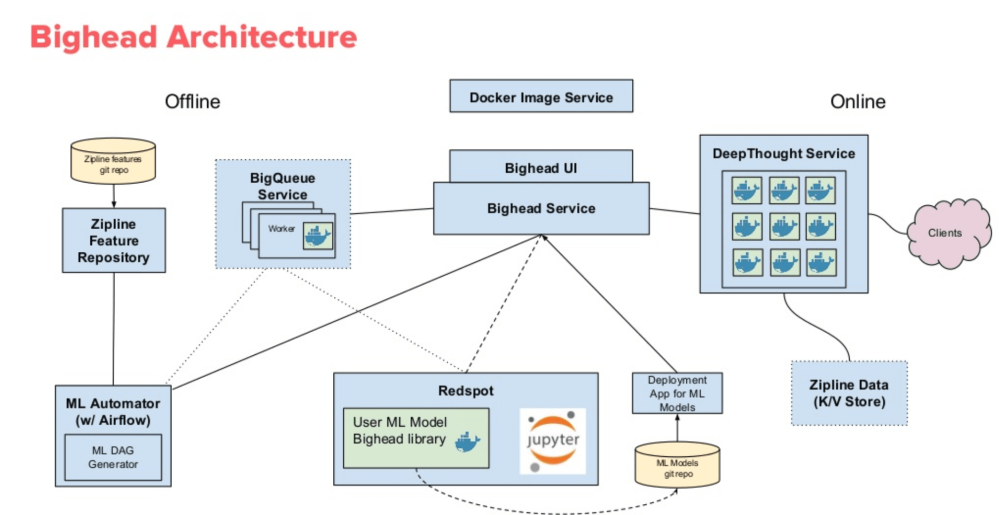

One of the presentations I found most interesting was Airbnb’s. They presented how they approach AI through BigHead, their end-to-end machine learning platform, and how it lets them put their AI models into production as easily and simply as possible.

An older version of the slides presenting their AI infrastructure philosophy is available through this link.

I’m looking forward to hearing your feedback.

Cheers,

Nessie